Wednesday, May 5, 2010

BS

What do you think? Is not caring about whether you're telling the truth worse than deliberately lying?

Sunday, May 2, 2010

Homework #3

- First, very briefly explain the argument that the ad offers to sell its product.

- Then, list and explain the mistakes in reasoning that the ad commits.

- Then, list and explain the psychological ploys the ad uses (what psychological impediments does the ad try to exploit?).

- Attach the ad (if it's from a newspaper or magazine) or briefly describe it.

Friday, April 30, 2010

Intellectual Humility

Getting us to care is the real goal. We should care about good evidence. We should care about evidence and arguments because they get us closer to the truth. When we judge an argument to be good overall, THE POWER OF LOGIC COMPELS US to believe the conclusion. If we are presented with decent evidence for some claim but still stubbornly disagree with it for no strong reason, we are just being irrational. Worse, we’re effectively saying that the truth doesn’t matter to us.

Instead of resisting, we should be open-minded. We should be willing to challenge ourselves (seriously challenge ourselves) and allow new evidence to change our current beliefs when the evidence warrants it. We should be open to the possibility that we’ve currently gotten something wrong. This is how comedian Todd Glass puts it:

Here are the first two paragraphs of an interesting article on this:

Last week, I jokingly asked a health club acquaintance whether he would change his mind about his choice for president if presented with sufficient facts that contradicted his present beliefs. He responded with utter confidence. “Absolutely not,” he said. “No new facts will change my mind because I know that these facts are correct.”

I was floored. In his brief rebuttal, he blindly demonstrated overconfidence in his own ideas and the inability to consider how new facts might alter a presently cherished opinion. Worse, he seemed unaware of how irrational his response might appear to others. It’s clear, I thought, that carefully constructed arguments and presentation of irrefutable evidence will not change this man’s mind.

Ironically, having extreme confidence in oneself is often a sign of ignorance. Remember, in many cases, such stubborn certainty is unwarranted.

Wednesday, April 28, 2010

Metacognition

There's a name for all the studying of our natural thinking styles we've been doing in class lately: metacognition. When we think about the ways we think, we can vastly improve our learning abilities. This is what the Owning Our Ignorance club is about. I think this is the most valuable concept we're learning all semester. So if you read any links, I hope it's these two:

Tuesday, April 27, 2010

Practical Advice

Here are two other big, simple points I think make for some great practical advice:

- Actively seek out sources that you disagree with. We tend to surround ourselves with like-minded people and consume like-minded media. This hurts our chances of discovering that we've made a mistake. In effect, it puts up a wall of rationalization around our preexisting beliefs to protect them from any countervailing evidence.

- When we do check out our opponents, we tend to pick the obviously fallacious straw men rather than the sophisticated sources that could legitimately challenge our beliefs. But this is bad! We should focus on the best points in the arguments against what we believe. Our opponents' good points deserve more attention than their obviously bad ones. Yet we often focus on their mistakes rather than on the reasons that hurt our case the most.

Monday, April 26, 2010

Let's All Nonconform Together

- On the influence of your in-groups and the formation of your identity: "If you want to set yourself apart from other people, you have to do things that are arbitrary, and believe things that are false." (from Paul Graham's "Lies We Tell Our Kids.")

- Here's a summary of two recent studies suggesting that a partisan mindset stems from a feeling of moral superiority.

- Here's that poll showing the Republican-Democrat switcharoo regarding their opinion of Fed Chairman Ben Bernanke when the executive office changed parties.

- Our political loyalties also influence our view on the economy.

- Here's an article about a cool study on the relationship between risk and provincialism.

- Conformity hurts the advancement of science.

Friday, April 23, 2010

Status Quo Bias

- If it already exists, we assume it's good.

- Our mind works like a computer that depends on cached responses to thoughtlessly complete common patterns.

- NYU psychologist John Jost does a lot of work on system justification theory: our tendency to unconsciously rationalize the status quo, especially unjust social institutions. Scarily, those of us oppressed by such institutions have a stronger tendency to justify their existence.

- Jost has a new book on this stuff. Here's a video dialogue about his research:

Wednesday, April 21, 2010

Wished Pots Never Boil

- If you're a fan of The Secret, you should be aware that its basic message is wishful thinking run amok.

- Teachers have biases, too: we're self-serving and play favorites.

- Why don't we give more aid to those in need? Psychological impediments are at least partly to blame.

- Why do we believe medical myths (like "vitamin C cures the common cold," or "you should drink 8 glasses of water a day")? Psychological impediments, of course!

Monday, April 19, 2010

Second-Hand News

Sunday, April 18, 2010

The Smart Bias

“Many of us would like to believe that intellect banishes prejudice. Sadly, this is itself a prejudice.”

Saturday, April 17, 2010

No, You're Not

You've probably noticed that one of my favorite blogs is Overcoming Bias. Their mission statement is sublimely anti-I'M-SPECIAL-ist:

"How can we better believe what is true? While it is of course useful to seek and study relevant information, our minds are full of natural tendencies to bias our beliefs via overconfidence, wishful thinking, and so on. Worse, our minds seem to have a natural tendency to convince us that we are aware of and have adequately corrected for such biases, when we have done no such thing."

This may sound insulting, but one of the goals of this class is getting us to recognize that we're not as smart as we think we are. All of us. You. Me! That one. You again. Me again!

So I hope you'll join the campaign to end I'M-SPECIAL-ism.

Friday, April 16, 2010

The Importance of Being Stochastic

Anyway, a few links:

- I brought up this article before, but I'll mention it again: most of us are pretty bad at statistical reasoning.

- That radio show I love recently devoted an entire episode to probability:

- Here's a review of a decent book (The Drunkard's Walk: How Randomness Rules Our Lives) on our tendency to misinterpret randomness as if it's an intentional pattern.

- This ability to see patterns where there are none may explain why so many of us believe in god (see section 5 in particular).

- What was that infinite monkey typewriter thing we were talking about in class?

- What's up with that recent recommendation that routine breast cancer screenings should start in your 50s rather than your 40s? Math helps explain it.

- Another radio show I love ran a great 2-part series on screening for diseases called "You Are Pre-Diseased":

Listen to Episode 1

Listen to Episode 2

- Statistics in sports is all the rage lately. It can justify counterintuitive decisions, like going for it instead of punting on 4th down... though don't expect the fans to buy that fancy math learnin'.

Thursday, April 15, 2010

Quiz #2

- Fallacies (from appeal to ignorance through the end of chapter 5)

- Psychological Impediments (chapter 4)

Wednesday, April 14, 2010

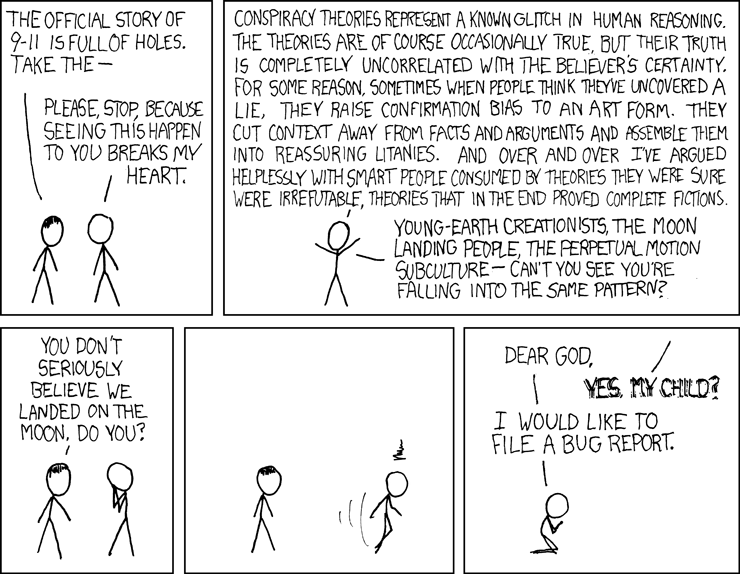

The Conspiracy Bug

While there are a handful of Web sites that seek to debunk the claims of Mr. Jones and others in the movement, most mainstream scientists, in fact, have not seen fit to engage them.

"There's nothing to debunk," says Zdenek P. Bazant, a professor of civil and environmental engineering at Northwestern University and the author of the first peer-reviewed paper on the World Trade Center collapses.

"It's a non-issue," says Sivaraj Shyam-Sunder, a lead investigator for the National Institute of Standards and Technology's study of the collapses.

Ross B. Corotis, a professor of civil engineering at the University of Colorado at Boulder and a member of the editorial board at the journal Structural Safety, says that most engineers are pretty settled on what happened at the World Trade Center. "There's not really disagreement as to what happened for 99 percent of the details," he says.

And one more excerpt on reasons to be skeptical of conspiracy theories in general:

One of the most common intuitive problems people have with conspiracy theories is that they require positing such complicated webs of secret actions. If the twin towers fell in a carefully orchestrated demolition shortly after being hit by planes, who set the charges? Who did the planning? And how could hundreds, if not thousands of people complicit in the murder of their own countrymen keep quiet? Usually, Occam's razor intervenes.

Another common problem with conspiracy theories is that they tend to impute cartoonish motives to "them" — the elites who operate in the shadows. The end result often feels like a heavily plotted movie whose characters do not ring true.

Then there are other cognitive Do Not Enter signs: When history ceases to resemble a train of conflicts and ambiguities and becomes instead a series of disinformation campaigns, you sense that a basic self-correcting mechanism of thought has been disabled. A bridge is out, and paranoia yawns below.

Tuesday, April 13, 2010

Rationalizing Away from the Truth

- Recent moral psychology suggests that we often simply rationalize our snap moral judgments. (Or worse: we actually undercut our snap judgments to defend whatever we want to do.)

- The great public radio show Radio Lab devoted an entire show to the psychology of our moral decision-making:

- Humans' judge-first, rationalize-later approach stems in part from the two competing decision-making styles inside our heads.

- For more on the dual aspects of our minds, I strongly recommend reading one of the best philosophy papers of 2008: "Alief and Belief" by Tamar Gendler.

- Here's a video dialogue between Gendler and her colleague (psychologist Paul Bloom) on her work: